Compute Engine

The ideal IaaS for your workload

Cost-effective vCPUs and powerful dedicated cores

Flexibility with no minimum contract

24/7 expert support included

Benefits of the Compute Engine

Select the exact resources you need. You're not restricted to pre-built instances.

Make your virtual machines available on public or private LANs as required. The granular configurable firewall, managed NAT gateway and DDoS protection ensure that the connection remains secure. The network load balancer guarantees its resilience.

With the Compute Engine, you get customized resources that are billed depending on the amount you use. Stop your VM to reduce costs and reactivate it when it’s needed again.

Use proven access protocols to manage your cloud securely and from any location.

Connect additional network cards and storage media as required in order to integrate your peripherals into your cloud infrastructure. These can be combined without any restrictions.

Choose your server type

Compute Engine can be used with two different processor variants: vCPU or Dedicated Core instances.

Our new vCPU servers are a cost-effective instance type. They offer perfect performance and scalability for a wide range of workloads and come at an affordable price.

Typical applications include:

Databases

Development and test environments

Websites with low traffic

Basic software services

Cache servers

eCommerce platforms

With Dedicated Core virtual machines, you benefit from a dedicated physical core with two hyper-threads.

They are particularly suited for performance-intensive applications and workloads that have high traffic or processing demands and require constant high-performance CPU provisioning, including:

Extensive databases

Data processing

Real-time analyses

High-performance computing

Big data modeling

ERP systems

Real-time applications

High-traffic websites

Satisfied IONOS Cloud customers

IONOS is a pioneer of high-performance and future-proof cloud infrastructure.

The right CPU architecture for your workload

Our new cost-optimized vCPU instances are ideal for general VM workloads without particularly high demands or load peaks, such as web applications and websites with moderate data traffic, or development and test environments.

Or discover the full power of Dedicated Core instances (AMD or Intel®, depending on location) without performance restrictions. Even in smaller configurations, the IONOS Cloud gives you the same performance as higher-configured resources from US hyperscalers.

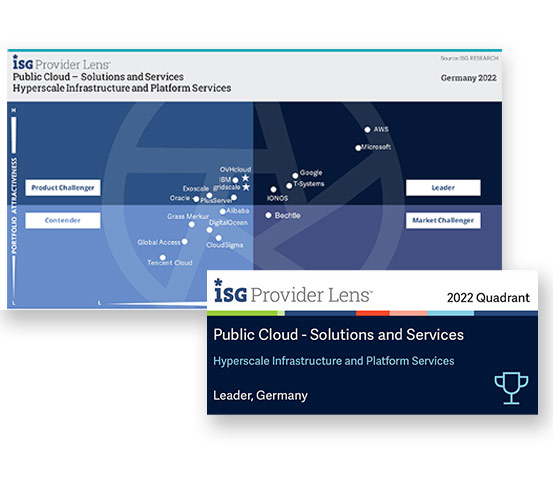

A leader in hyperscale infrastructure

Independent market analyses like the ISG Provider Lens show how IONOS stacks up against the biggest cloud providers. In 2022, we were named a Leader in the hyperscale infrastructure quadrant, and we’re working hard to maintain our peak position.

Compute Engine prices

With the IONOS Cloud, you always have the right server configuration for your needs and budget.

Enhance efficiency with competitive pricing. The Compute Engine with dedicated cores offers favorable rates compared to similar offers. We include all server components, including RAM, storage, outbound traffic, and service support, for any given instance when we assess our prices against other cloud providers.

Dedicated Core

Our Compute Engine is based on dedicated resources, where a virtual core is equivalent to a physical core. That means no noisy neighbors or overbooking.

| Vendor | Processor | Usage | Hyper-thread | Clock frequency | Number of cores | Price |
|---|---|---|---|---|---|---|
| AMD | EPYC (Milan) | Dedicated | Yes | 2.0 GHz | 1 – 62 (2 – 124 logical hyper-threads) | $0.045 / Core / hr. |
| Intel® Xeon® | Haswell | Dedicated | Yes | 2.1 / 2.4 GHz | 1 – 51 (2 – 102 logical hyper-threads) | $0.05 / Core / hr. |
| Intel® Xeon® | Skylake | Dedicated | Yes | 2.1 / 2.4 GHz | 1 – 51 (2 – 102 logical hyper-threads) | $0.05 / Core / hr. |
| Intel® Xeon® | Ice Lake | Dedicated | Yes | 2.0 GHz | 1 – 62 (2 – 124 logical hyper-threads) | $0.05 / Core / hr. |


Please note: The Intel cores offered by IONOS Cloud use hyper-threading. With hyper-threading, a single physical Intel® core is presented as two “logical cores” that process separate threads.

Intel® Xeon® Haswell is available with at least 27 cores; at some locations, up to 51 cores can be configured.

| Resource | Price |
|---|---|
| RAM | $0.0071/GB/hr. |


IONOS Cloud uses cloud-optimized RAM. You can choose between 0.25 GB and 240 GB of RAM for your cloud server with dedicated core.
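As a rough illustration of how the Dedicated Core and RAM rates combine, the sketch below estimates the hourly cost of a single server. It assumes that the price is simply cores times the core rate plus RAM times the RAM rate; storage, traffic, and license fees are left out. The dictionary keys, the function name, and the 730-hour month are assumptions made for this example, not official identifiers or billing rules.

```python
# Rates and core limits are taken from the Dedicated Core and RAM tables;
# the dictionary keys are hypothetical labels, not official identifiers.
DEDICATED_CORES = {
    "amd_epyc_milan": {"rate": 0.045, "max_cores": 62},
    "intel_haswell": {"rate": 0.05, "max_cores": 51},
    "intel_skylake": {"rate": 0.05, "max_cores": 51},
    "intel_ice_lake": {"rate": 0.05, "max_cores": 62},
}
RAM_RATE_PER_GB = 0.0071  # USD per GB per hour
HOURS_PER_MONTH = 730     # assumption: average month length in hours


def dedicated_hourly_cost(cpu: str, cores: int, ram_gb: float) -> float:
    """Estimate the hourly cost (USD) of cores plus RAM for one server."""
    spec = DEDICATED_CORES[cpu]
    if not 1 <= cores <= spec["max_cores"]:
        raise ValueError(f"{cpu} supports 1 to {spec['max_cores']} cores")
    if not 0.25 <= ram_gb <= 240:
        raise ValueError("RAM must be between 0.25 GB and 240 GB")
    return cores * spec["rate"] + ram_gb * RAM_RATE_PER_GB


hourly = dedicated_hourly_cost("amd_epyc_milan", cores=4, ram_gb=16)
print(f"${hourly:.4f}/hr., about ${hourly * HOURS_PER_MONTH:.2f} for {HOURS_PER_MONTH} hours")
```

The range checks mirror the limits stated on this page; actual availability may differ by location.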

vCPU

Select up to 60 vCPUs per server.

| Resource | Price |
|---|---|
| 1 vCPU | $0.0310 / hr. |
| RAM | $0.0071/GB/hr. |


Configuration suggestions for vCPU instances

Use the following configuration suggestions for an easy way to deploy vCPU VMs. The full-flex option enables you to customize them at any time to suit your needs.

| Size | Number of vCPUs | RAM | Block Storage | Price from |
|---|---|---|---|---|
| S | 2 | 8 GB | Price according to selected size and type | $0.119/hr. |
| M | 4 | 16 GB | Price according to selected size and type | $0.238/hr. |
| L | 8 | 32 GB | Price according to selected size and type | $0.475/hr. |
| XL | 16 | 64 GB | Price according to selected size and type | $0.950/hr. |
| XXL | 32 | 128 GB | Price according to selected size and type | $1.901/hr. |
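The "Price from" values appear to follow directly from the vCPU and RAM rates listed above. The following sketch recomputes them under the assumption that the starting price is vCPUs times $0.0310 plus RAM in GB times $0.0071, with block storage excluded. The function and variable names are made up for this example.

```python
VCPU_RATE = 0.0310        # USD per vCPU per hour
RAM_RATE_PER_GB = 0.0071  # USD per GB per hour

# Suggested sizes from the table: name -> (vCPUs, RAM in GB)
PRESETS = {
    "S": (2, 8),
    "M": (4, 16),
    "L": (8, 32),
    "XL": (16, 64),
    "XXL": (32, 128),
}


def vcpu_hourly_price(vcpus: int, ram_gb: float) -> float:
    """Hourly price (USD) for vCPUs and RAM only; block storage is extra."""
    return vcpus * VCPU_RATE + ram_gb * RAM_RATE_PER_GB


for name, (vcpus, ram_gb) in PRESETS.items():
    print(f"{name}: ${vcpu_hourly_price(vcpus, ram_gb):.3f}/hr.")
```

Rounded to three decimals, the results match the table ($0.119, $0.238, $0.475, $0.950, $1.901), so the same function can be used to estimate a custom configuration.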


Microsoft licenses for Compute Engine

| Microsoft Windows Server (2016, 2019, 2022) | Price |
|---|---|
| vCPU | $0.0159 / hr. |
| Dedicated Core - AMD | $0.0318 / hr. |
| Dedicated Core - Intel® | $0.0318 / hr. |


Red Hat Enterprise Linux licenses for the Compute Engine

| Red Hat Enterprise Linux | Price |
|---|---|
| RHEL 8/9 License Small Virtual Node (1-4 vCPUs/Cores) | $0.06/hr. |
| RHEL 8/9 License Large Virtual Node (>4 vCPUs/Cores) | $0.13/hr. |


The RHEL license fees are charged pay-as-you-go per hour or part thereof. There is no price difference between Intel and AMD servers.
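To make "per hour or part thereof" concrete, here is a small sketch of how such a fee could be estimated. It assumes that the fee applies once per virtual node, that the node size is decided by the number of vCPUs or cores, and that every started hour is billed in full. The function name and the rounding to cents are choices made for this example only.

```python
import math

RHEL_SMALL_RATE = 0.06  # USD per hour, 1-4 vCPUs/cores
RHEL_LARGE_RATE = 0.13  # USD per hour, more than 4 vCPUs/cores


def rhel_license_fee(cores: int, runtime_hours: float) -> float:
    """Estimate the RHEL license fee (USD) for one virtual node."""
    if cores < 1:
        raise ValueError("a node needs at least one vCPU or core")
    if runtime_hours < 0:
        raise ValueError("runtime cannot be negative")
    rate = RHEL_SMALL_RATE if cores <= 4 else RHEL_LARGE_RATE
    billed_hours = math.ceil(runtime_hours)  # a started hour counts as a full hour
    return round(rate * billed_hours, 2)


print(rhel_license_fee(cores=4, runtime_hours=2.5))  # billed as 3 hours
print(rhel_license_fee(cores=8, runtime_hours=10))   # billed as 10 hours
```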

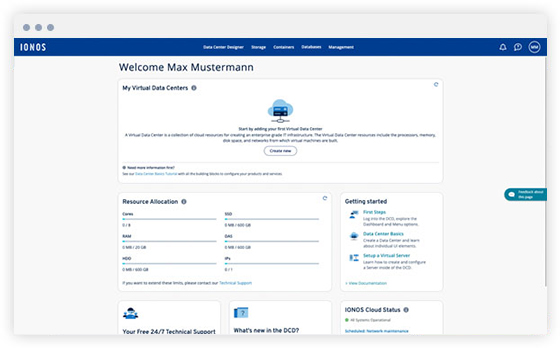

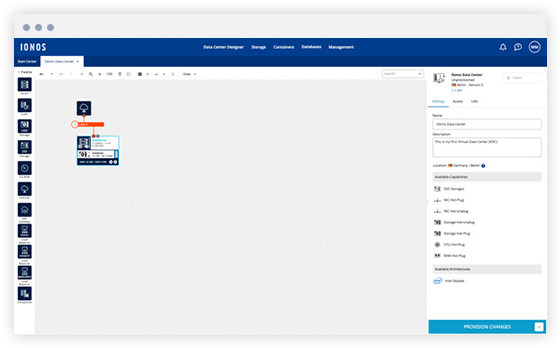

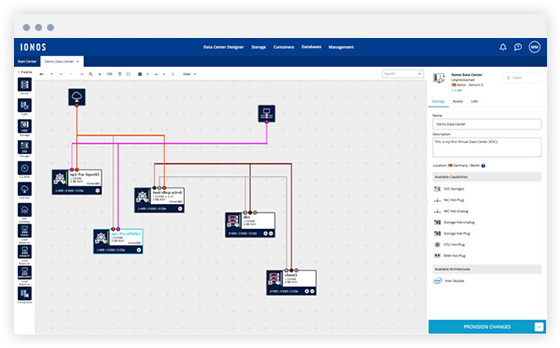

Get started

-

Quick registration: Create your admin account in just a few simple steps. It's secured with 2-factor authentication.

-

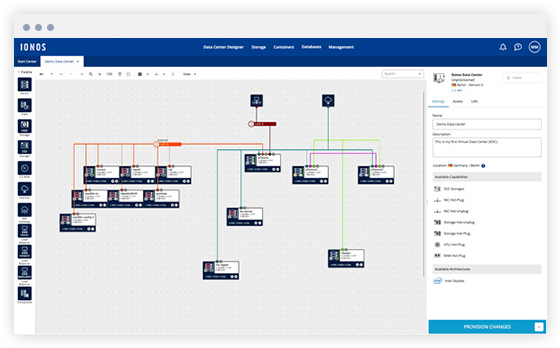

Simple setup: Configure your servers, memory and network connections via drag and drop in the Data Center Designer or API.

-

Ready to go: Once the configuration of your virtual data center is finished, you can start operating within a few minutes.

Success stories: Compute Engine solutions in practice

Find out how our customers benefit from the IONOS Cloud computing platform and in doing so make the most of their IT.


FAQ — frequently asked questions

Scalability, elasticity, and robustness are the most important characteristics of any cloud-based application. An enterprise cloud computing architecture must be designed based on these characteristics. In particular, it must be able to cope with strongly fluctuating loads on the virtual systems and enormous amounts of data, while at the same time making sure the operations run in an efficient and economical manner. Redundancy, regular snapshots, and backups are mandatory.

Measures for building a cloud architecture at enterprise level:

- Availability at cluster level or even as a high availability application including fail-over and continued data consistency

- Distribution of entities including mirroring of the applications and virtual data centers, and also with the help of geographical redundancy (in parallel operation of both the infrastructure and the programming of an application)

- Scalability without interrupting ongoing operations, as vertical as possible to save resources, and with support for developing shared-nothing systems

- Automation, which must be made possible with the help of microservices, especially in times of agile software development and CI/CD

- Configuration management, security design, access management including logging or multi-client capability

- Step-by-step migration and preparation of the cloud for hybrid or multi-cloud operation

- Prevention of lock-in through proprietary cloud technologies

Backup and disaster recovery strategies are essential for businesses in order to minimize the impact of system failures. The IONOS Compute Engine provides you with an effective tool for this in the form of Acronis Cloud Backup.

Disaster Recovery

For the average company, data is crucial for working processes. Therefore, it should be backed up effectively so that it can be restored very quickly in case of an emergency. Successful disaster recovery means that efficient workflows can resume as soon as possible.

The advantages are clear:

- Short downtimes mean that a company can still be reached at practically any time, which leads to growing trust in the company and an improved brand image.

- The company's ability to conduct business is maintained, and financial losses are kept within reasonable limits.

- Effective backups help companies comply with their obligation to retain accounting and financial data in accordance with data protection requirements.

Business continuity and disaster recovery

Business Continuity Management comprises all measures that ensure that the ongoing operations of a company are affected as little as possible in extreme or catastrophic situations (e.g. power failure, pandemics, natural disasters) or can be restored to normal very quickly.

Disaster recovery strategies, on the other hand, refer to the rapid restoration of IT systems and stored data.

In order for disaster recovery to be successful, a plan for structured data recovery must be in place.

When deciding how best to proceed, the following questions could help:

- How much data may be lost? (Recovery Point Objective, RPO)

- Which backup method should I choose, and at what intervals should data be backed up?

- How long can the system be down for before it becomes critical for the business? (Recovery Time Objective, RTO)

- What is the ideal location for backups?

- What types of backups are available and how are they best used?

  - Full backup

  - Differential backup

  - Incremental backup
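The RPO and RTO questions above can be turned into a quick sanity check. The sketch below rests on a simplifying assumption: in the worst case, a failure occurs just before the next scheduled backup, so the potential data loss equals the backup interval. The function names and the example figures are hypothetical.

```python
from datetime import timedelta


def worst_case_data_loss(backup_interval: timedelta) -> timedelta:
    """Assumption: a failure just before the next backup loses one full interval."""
    return backup_interval


def check_objectives(
    backup_interval: timedelta,
    estimated_restore_time: timedelta,
    rpo: timedelta,
    rto: timedelta,
) -> dict[str, bool]:
    """Compare a backup plan against the recovery point and time objectives."""
    return {
        "rpo_met": worst_case_data_loss(backup_interval) <= rpo,
        "rto_met": estimated_restore_time <= rto,
    }


result = check_objectives(
    backup_interval=timedelta(hours=6),
    estimated_restore_time=timedelta(hours=2),
    rpo=timedelta(hours=4),
    rto=timedelta(hours=3),
)
print(result)  # {'rpo_met': False, 'rto_met': True}
```

In this example, backing up every six hours cannot satisfy a four-hour RPO, so the interval would have to be shortened.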

Data backup with Acronis Backup Cloud and the IONOS Compute Engine

The Compute Engine is a public cloud. But not all elements of an IT infrastructure need to be in the cloud: on-premises solutions are often combined with components in the cloud. Acronis Cloud Backup backs up all these components reliably. You can also use Acronis Cloud Backup for IT systems that are not in the enterprise cloud at all.

The advantage is ease of use: the Acronis backup console can be reached from the user interface and API of the Compute Engine. There you determine which servers or VMs are backed up, and with which backup method. This makes your work easier even if you later move all or part of your system from "real" hardware to the cloud: access to the Acronis backup console is always in the same place, no matter how much your system changes over time.

Acronis initially performs a full backup and then saves changes incrementally. In the event of an incident, the entire data backup can later be restored as a full backup – all at the push of a button. With Acronis Cloud Backup in the Compute Engine, you have a powerful tool at hand that helps you to protect all components of your IT system appropriately.
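To illustrate in general terms why the backup type matters at restore time, the sketch below works out which backups are needed to restore a given day. It models the textbook definitions (a differential holds all changes since the last full backup, an incremental holds the changes since the previous backup) and is not a description of how Acronis stores or restores data internally. All names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backup:
    day: int   # day on which the backup was taken
    kind: str  # "full", "differential" or "incremental"


def restore_chain(backups: list[Backup], target_day: int) -> list[Backup]:
    """Return the backups needed, in order, to restore the state of target_day."""
    usable = sorted((b for b in backups if b.day <= target_day), key=lambda b: b.day)
    full_positions = [i for i, b in enumerate(usable) if b.kind == "full"]
    if not full_positions:
        raise ValueError("no full backup available on or before the target day")
    start = full_positions[-1]
    chain = [usable[start]]
    later = usable[start + 1:]
    differentials = [b for b in later if b.kind == "differential"]
    if differentials:
        # A differential covers everything since the last full backup,
        # so only the newest one is needed.
        chain.append(differentials[-1])
    newest_day = chain[-1].day
    # Each incremental covers only the changes since the previous backup,
    # so every incremental after the newest base is needed.
    chain.extend(b for b in later if b.kind == "incremental" and b.day > newest_day)
    return chain


incremental_plan = [Backup(0, "full")] + [Backup(d, "incremental") for d in (1, 2, 3)]
differential_plan = [Backup(0, "full")] + [Backup(d, "differential") for d in (1, 2, 3)]

print([b.day for b in restore_chain(incremental_plan, 3)])   # [0, 1, 2, 3]
print([b.day for b in restore_chain(differential_plan, 3)])  # [0, 3]
```

Incremental backups keep each backup small but lengthen the restore chain, while differential backups grow over time but need only two pieces to restore.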

Data management is the sum of all technical, conceptual and organizational measures and procedures for the collection, storage and provision of data. This is so that various processes in companies can be optimally supported. Data management includes measures to ensure…

- Data quality

- Data consistency

- Data security

- Data encryption

- Data archiving

- Data access

- Data deletion

- Data life cycle management.

The various aspects and elements of data collection, data access, and data storage are also taken into account as part of data management.

The IONOS Compute Engine provides virtual data centers that customers can configure to their own requirements and use like conventional data centers. In the cloud, there are no limits when it comes to implementing an internal data management guideline or following external compliance guidelines.

As a product of IONOS SE with headquarters and data centers in Germany, the IONOS Compute Engine is fully subject to the GDPR requirements and simultaneously offers maximum security against the US CLOUD Act.

IONOS understands how important a clear strategy is for using the cloud as effectively as possible. Professional Services cloud consultants guide customers and work with them to develop meaningful cloud architectures and test them in a proof of concept.

Customers can draw on a large number of scripts and best-practice papers for migrating IT workloads. If a problem arises, they can turn to our professional 24/7 support and receive detailed instructions from trained system administrators.

The IONOS Compute Engine is a so-called public cloud and offers infrastructure-as-a-service and platform-as-a-service.

Unlike a private cloud, the public cloud offers services available to the general public and not exclusively for a specific organization. A private cloud is either operated by the organization itself or provided exclusively by a service provider for this specific organization.

The use of a public cloud can be restricted by strict legal data protection regulations or by security concerns. In certain industries, regulation prohibits the transfer of data to external service providers who do not meet the strict data protection requirements. In such cases, the best option is either the IONOS Compute Engine (with maximum security against the US CLOUD Act), a private cloud, or a hybrid cloud (a mixture of private and public clouds).

The hybrid cloud offers combined access to the services of a public cloud and a private cloud depending on the requirements of the respective application. Compared to the public cloud, an advantage of the private cloud is that the performance of the services is influenced even less by other customers than in the kernel-centered virtualization of the IONOS Compute Engine.